Application Replication

This topic describes how to use a replication slice to replicate applications from one cluster to another.

Overview

The replication slice is a new slice type in the KubeSlice platform. It is designed to transfer applications and resources efficiently from one cloud or data center cluster to another. After the namespaces are replicated, they can be integrated into an application slice that manages them. This is useful when the replicated applications need to communicate with remote applications in the source cluster or in any other cluster.

A replication slice can also be used to back up or replicate namespaces by specifying the same cluster as the source and the destination during slice creation. The replicated namespaces can be restored later.

Replicate Applications Between Two Worker Clusters

In this procedure, you install the KubeSlice Controller, register two worker clusters, deploy the Boutique application microservices on one worker cluster, create a replication slice that connects both worker clusters, and run a replication job.

- Clone the examples repo. It contains all the required configuration files in the examples/replication-demo directory.

  Use the following command to clone the examples repo:

      git clone https://github.com/kubeslice/examples.git

  After cloning the repo, use the files from the examples/replication-demo directory.

  The following tree structure describes all the folders and files of the examples/replication-demo directory.

      ├── boutique-app-manifests             # Contains the Boutique application's frontend and backend services
      │   └── boutique-app.yaml              # Contains the Boutique app
      ├── kubeslice-cli-topology-template    # Contains the topology template for installing the KubeSlice Controller and registering two worker clusters
      │   └── kubeslice-cli-topology-template.yaml
      ├── replication-job-config             # Contains the replication job YAML configuration
      │   └── replication-job-config.yaml
      └── replication-slice-config           # Contains the replication slice YAML configuration
          └── replication-slice-config.yaml

- Install the KubeSlice Controller. Identify a controller cluster on which to install the KubeSlice Controller, and identify two worker clusters, worker-1 and worker-2, to register with it. Use the following template to install the KubeSlice Controller and register the two worker clusters:

      examples/replication-demo/kubeslice-cli-topology-template/kubeslice-cli-topology-template.yaml

  - Modify the values as per your requirement. Refer to topology parameters for more details. Add the project name as kubeslice-avesha, in which you will create the replication slice. If you provide a different project name, be sure to use that project when creating the replication slice.

    Info: For more information, see kubeslice-cli. To install the KubeSlice Controller and KubeSlice Manager using YAML, refer to installing the KubeSlice Controller.

    Note: KubeSlice Manager simplifies cluster registration and slice operations. To know more, see cluster operations.

- Install KubeSlice using the following command on the controller cluster:

      kubeslice-cli --config examples/replication-demo/kubeslice-cli-topology-template/kubeslice-cli-topology-template.yaml install

  Running this command installs the KubeSlice Controller and registers the worker-1 and worker-2 clusters with it.

-

On the controller cluster, create a namespace called

boutiqueusing the following command:kubectl create ns boutique -

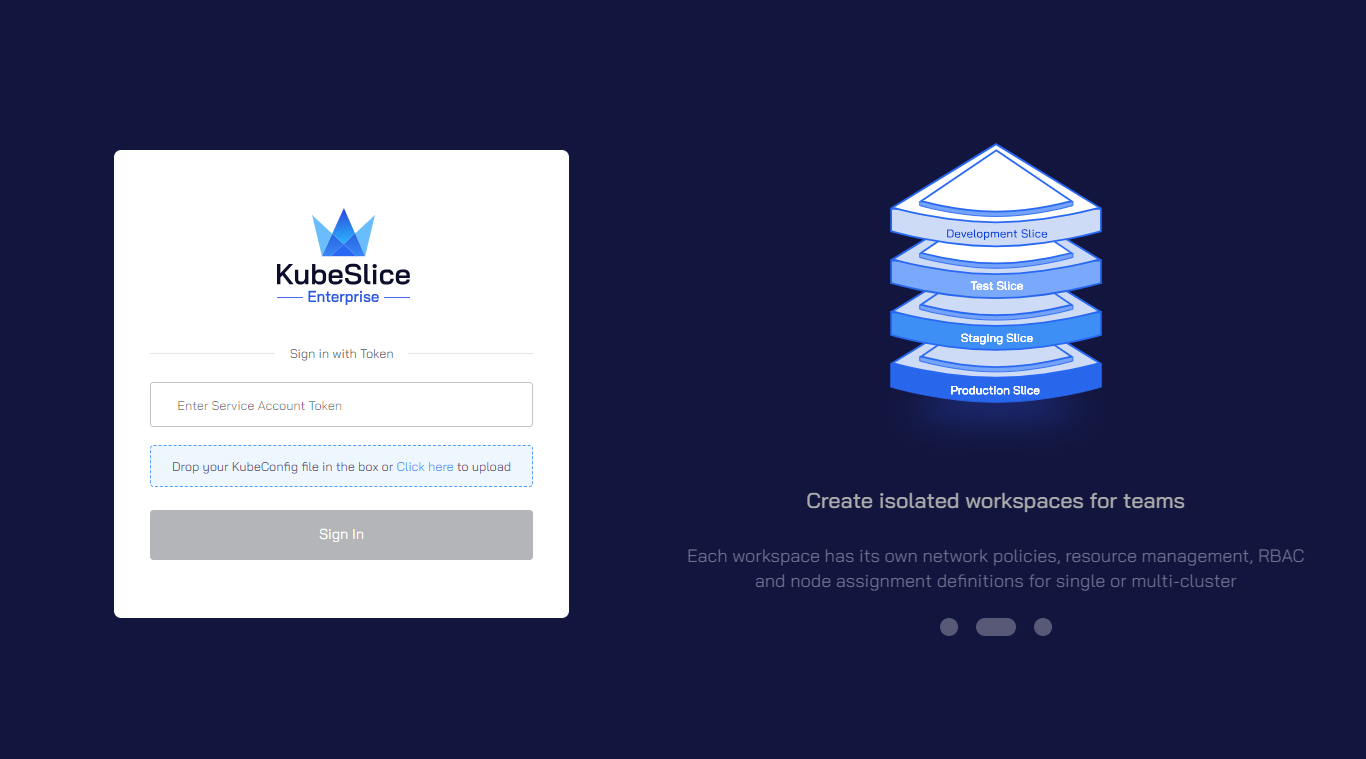

(Optional) To retrieve the endpoint/URL for accessing the KubeSlice Manager:

-

Run the following command on the KubeSlice Controller:

kubectl get service kubeslice-ui-proxy -n kubeslice-controller -o jsonpath='{.status.loadBalancer.ingress[0].ip}' -

The output should display the endpoint/URL in the following format:

https://<LoadBalancer-IP> -

Copy the endpoint/URL from the output and paste into your browser window to access the KubeSlice Manager.
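The jsonpath query above reads only the `ip` field, while some cloud load balancers publish a `hostname` instead. The following is a minimal sketch that tries both fields and then builds the URL. The function names `manager_url` and `print_manager_url` are illustrative and are not part of KubeSlice.

```shell
# Builds the KubeSlice Manager URL from a load balancer address.
# Fails when the address is empty.
manager_url() {
  [ -n "$1" ] || return 1
  printf 'https://%s\n' "$1"
}

# Looks up the load balancer address of the kubeslice-ui-proxy service,
# trying the ip field first and the hostname field second.
print_manager_url() {
  addr=$(kubectl get service kubeslice-ui-proxy -n kubeslice-controller \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  if [ -z "$addr" ]; then
    addr=$(kubectl get service kubeslice-ui-proxy -n kubeslice-controller \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
  fi
  manager_url "$addr"
}
```

Calling `print_manager_url` with kubectl pointed at the controller cluster prints the URL, or returns a non-zero status when the service has no external address yet.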

  - You need a service-account token to log in to the KubeSlice Manager. Retrieve the token using the following command:

    Note: This command works for an admin with admin-ui as the username.

        kubectl get secret kubeslice-rbac-rw-admin-ui -o jsonpath="{.data.token}" -n kubeslice-avesha | base64 --decode

    Running this command returns a secret token. Use this token to log in to the KubeSlice Manager.

- Deploy the Boutique application on the worker-1 cluster using the following command:

      kubectl apply -f examples/burst-demo/boutique-app-manifests/boutique-app.yaml -n boutique

- Validate the Boutique application pods on the worker-1 cluster using the following command:

      kubectl get pods -n boutique

  Expected Output

      NAME                                     READY   STATUS    RESTARTS   AGE
      adservice-5c6f8b77fb-7t6tf               1/1     Running   0          12s
      cartservice-5445649469-fzc6l             1/1     Running   0          19s
      checkoutservice-5cc44f775c-kkgn2         1/1     Running   0          8s
      currencyservice-66f5c9f698-dgw2v         1/1     Running   0          17s
      emailservice-59c44668d7-jrk5k            1/1     Running   0          9s
      frontend-77464b4775-mzj5z                1/1     Running   0          5s
      paymentservice-6c5465c467-q2fhj          1/1     Running   0          2s
      productcatalogservice-c57fbfdf-vxh7g     1/1     Running   0          1s
      recommendationservice-5677d665d6-9tgs8   1/1     Running   0          6s
      redis-cart-bbf4477f4-gs6cn               1/1     Running   0          13s
      shippingservice-5d94d4496f-njbdj         1/1     Running   0          16s
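Reading the pod table by eye is error-prone when many pods are listed. The following is a minimal sketch of a check that passes only when every row reports a STATUS of Running and a READY count of n/n. The function name `all_pods_running` is illustrative, and the sketch assumes the default column layout shown above.

```shell
# Reads `kubectl get pods` table output on stdin. Succeeds only when at
# least one pod is listed and every pod is Running with all containers ready.
all_pods_running() {
  awk '
    NR == 1 && $1 == "NAME" { next }   # skip the header row
    NF == 0 { next }                   # skip blank lines
    {
      split($2, ready, "/")            # READY column, for example 1/1
      if ($3 != "Running" || ready[1] != ready[2]) { bad++ }
      rows++
    }
    END {
      if (rows > 0 && bad == 0) { exit 0 }
      exit 1
    }
  '
}
```

For example, with kubectl pointed at the worker-1 cluster, `kubectl get pods -n boutique | all_pods_running && echo "all pods running"` prints the message only when the check passes.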

- Validate the Boutique application based on the service type:

  - For NodePort, use http://externalip:nodeport/.
  - For LoadBalancer, use https://<external-ip-of-LoadBalancer>/.

- Validate the services using the following command:

      kubectl get svc -n boutique

  Expected Output

      NAME                    TYPE           CLUSTER-IP       EXTERNAL-IP       PORT(S)          AGE
      adservice               ClusterIP      172.20.112.204   <none>            9555/TCP         1s
      cartservice             ClusterIP      172.20.249.101   <none>            7070/TCP         8s
      checkoutservice         ClusterIP      172.20.90.84     <none>            5050/TCP         17s
      currencyservice         ClusterIP      172.20.96.78     <none>            7000/TCP         7s
      emailservice            ClusterIP      172.20.117.63    <none>            5000/TCP         19s
      frontend                ClusterIP      172.20.28.197    <none>            80/TCP           14s
      frontend-external       LoadBalancer   172.20.190.246   <Load-Balancer>   80:31305/TCP     13s
      paymentservice          ClusterIP      172.20.60.15     <none>            50051/TCP        11s
      productcatalogservice   ClusterIP      172.20.114.176   <none>            3550/TCP         10s
      recommendationservice   NodePort       172.20.23.96     <none>            8080:32200/TCP   15s
      redis-cart              ClusterIP      172.20.227.236   <none>            6379/TCP         3s
      shippingservice         ClusterIP      172.20.213.101   <none>            50051/TCP        5s

- On the controller cluster, create a replication slice called boutique-migration using the following command:

  Note: In the command below, kubeslice-avesha is the project name that you pass in the kubeslice-cli topology template. If you have passed a different project name, use that project name in this command.

      kubectl apply -f examples/replication-demo/replication-slice-config/replication-slice-config.yaml -n kubeslice-avesha

  - Use the following command to validate the slice configuration:

        kubectl get migrationslice -n kubeslice-avesha

    Expected Output

        NAME                 AGE
        boutique-migration   14s

  - Use the following command to validate the source and destination worker clusters connected to the replication slice:

        kubectl get workermigrationslice -n kubeslice-avesha

    Expected Output

        NAME                          AGE
        boutique-migration-worker-1   20s
        boutique-migration-worker-2   19s

  - Switch to the worker-1 and worker-2 clusters, and use the following commands to verify the replication slice on the source and the destination worker cluster:

        kubectl get deploy -n migration

    Expected Output

        NAME     READY   UP-TO-DATE   AVAILABLE   AGE
        velero   1/1     1            1           35s

        kubectl get bsl -n migration

    Expected Output

        NAME                           PHASE       LAST VALIDATED   AGE   DEFAULT
        kubeslice-boutique-migration   Available   9s               83s

- After validating the replication slice, switch to the controller cluster and deploy the replication job using the following command:

      kubectl apply -f examples/replication-demo/replication-job-config/replication-job-config.yaml -n kubeslice-avesha

  To validate the replication job:

  - Use the following command on the controller cluster to validate the replication job configuration:

        kubectl get migrationjobconfig -n kubeslice-avesha

    Expected Output

        NAME                 AGE
        boutique-migration   43s

  - Use the following command on the controller cluster to validate the worker clusters connected to the replication slice:

        kubectl get workermigrationjobconfig -n kubeslice-avesha

    Expected Output

        NAME                          AGE
        boutique-migration-worker-1   49s
        boutique-migration-worker-2   49s

  - Switch to the worker-1 cluster (the source worker cluster) and validate the replication job using the following command:

        kubectl get backup -n migration

    Expected Output

        NAME                           AGE
        kubeslice-boutique-migration   9s

  - On the worker-1 cluster, use the following command to check whether the backup was successful:

        kubectl describe backup kubeslice-boutique-migration -n migration

  - Switch to the worker-2 cluster and validate the replication job using the following command:

        kubectl get restore -n migration

    Expected Output

        NAME                 AGE
        kubeslice-boutique   9s

  - On the worker-2 cluster, use the following command to check whether the restore was successful:

        kubectl describe restore kubeslice-boutique -n migration
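The describe output is long, so a scripted check can read the phase field directly. The following is a minimal sketch that assumes the backup and restore objects are Velero resources (as the velero deployment in the migration namespace suggests) and that their `.status.phase` ends in `Completed`, `PartiallyFailed`, `Failed`, or `FailedValidation`. The function names `phase_verdict` and `wait_for_phase` are illustrative.

```shell
# Maps a phase string to succeeded, failed, or pending.
# Assumption: phase names follow Velero's backup and restore status values.
phase_verdict() {
  case "$1" in
    Completed) echo "succeeded" ;;
    Failed|PartiallyFailed|FailedValidation) echo "failed" ;;
    *) echo "pending" ;;
  esac
}

# Polls a backup or restore in the migration namespace every 5 seconds,
# for up to 60 attempts, until it reaches a terminal phase.
wait_for_phase() {
  kind=$1
  name=$2
  tries=0
  while [ "$tries" -lt 60 ]; do
    phase=$(kubectl get "$kind" "$name" -n migration -o jsonpath='{.status.phase}')
    verdict=$(phase_verdict "$phase")
    if [ "$verdict" != "pending" ]; then
      echo "$kind/$name: $phase"
      [ "$verdict" = "succeeded" ]
      return
    fi
    tries=$((tries + 1))
    sleep 5
  done
  echo "$kind/$name: timed out" >&2
  return 1
}
```

For example, run `wait_for_phase backup kubeslice-boutique-migration` on the worker-1 cluster and `wait_for_phase restore kubeslice-boutique` on the worker-2 cluster.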

- After applying the replication job, check whether the Boutique pods are running on the worker-2 cluster (the destination worker cluster) using the following command:

      kubectl get pods -n boutique

  Expected Output

      NAME                                     READY   STATUS    RESTARTS   AGE
      adservice-5c6f8b77fb-7t6tf               1/1     Running   0          12s
      cartservice-5445649469-fzc6l             1/1     Running   0          19s
      checkoutservice-5cc44f775c-kkgn2         1/1     Running   0          8s
      currencyservice-66f5c9f698-dgw2v         1/1     Running   0          17s
      emailservice-59c44668d7-jrk5k            1/1     Running   0          9s
      frontend-77464b4775-mzj5z                1/1     Running   0          5s
      paymentservice-6c5465c467-q2fhj          1/1     Running   0          2s
      productcatalogservice-c57fbfdf-vxh7g     1/1     Running   0          1s
      recommendationservice-5677d665d6-9tgs8   1/1     Running   0          6s
      redis-cart-bbf4477f4-gs6cn               1/1     Running   0          13s
      shippingservice-5d94d4496f-njbdj         1/1     Running   0          16s

- After applying the replication job, check whether the Boutique services are running on the worker-2 cluster using the following command:

      kubectl get svc -n boutique

  Expected Output

      NAME                    TYPE           CLUSTER-IP       EXTERNAL-IP       PORT(S)          AGE
      adservice               ClusterIP      172.20.112.204   <none>            9555/TCP         1s
      cartservice             ClusterIP      172.20.249.101   <none>            7070/TCP         8s
      checkoutservice         ClusterIP      172.20.90.84     <none>            5050/TCP         17s
      currencyservice         ClusterIP      172.20.96.78     <none>            7000/TCP         7s
      emailservice            ClusterIP      172.20.117.63    <none>            5000/TCP         19s
      frontend                ClusterIP      172.20.28.197    <none>            80/TCP           14s
      frontend-external       LoadBalancer   172.20.190.246   <Load-Balancer>   80:31305/TCP     13s
      paymentservice          ClusterIP      172.20.60.15     <none>            50051/TCP        11s
      productcatalogservice   ClusterIP      172.20.114.176   <none>            3550/TCP         10s
      recommendationservice   NodePort       172.20.23.96     <none>            8080:32200/TCP   15s
      redis-cart              ClusterIP      172.20.227.236   <none>            6379/TCP         3s
      shippingservice         ClusterIP      172.20.213.101   <none>            50051/TCP        5s
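To confirm that nothing was left behind, the resource names on the source and destination clusters can be compared. The following is a minimal sketch of that comparison. The function name `missing_in_destination` and the kubeconfig context names `worker-1` and `worker-2` are assumptions, so substitute the context names from your own kubeconfig.

```shell
# Prints every line of the first file that is absent from the second file.
# Both files are sorted first because comm requires sorted input.
missing_in_destination() {
  src_sorted=$(mktemp) || return 1
  dst_sorted=$(mktemp) || return 1
  sort "$1" > "$src_sorted"
  sort "$2" > "$dst_sorted"
  comm -23 "$src_sorted" "$dst_sorted"
  rm -f "$src_sorted" "$dst_sorted"
}

# Usage sketch (context names are assumptions):
#   kubectl --context worker-1 get deploy,svc -n boutique -o name > source.txt
#   kubectl --context worker-2 get deploy,svc -n boutique -o name > destination.txt
#   missing_in_destination source.txt destination.txt
```

An empty result means every deployment and service name found on the source cluster also exists on the destination cluster.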