HPA with Datadog

This topic describes how to configure the Kubernetes Horizontal Pod Autoscaler (HPA) to read pod scaling recommendations from Datadog.

Smart Scaler agent chart version 2.0.2 and later supports using Datadog to extract metrics from the Inference Agent. The HPA then reads the extracted metrics from Datadog.

Before You Begin

You must expose the Smart Scaler metrics to Datadog and enable the external metrics API in the Datadog agent of an application cluster. Where you perform each task depends on which clusters run the Smart Scaler agent, the application with its HPA configuration, and the Datadog agent:

- Scenario 1: The Smart Scaler agent, the application with its HPA configuration, and the Datadog agent all run on the same cluster. Expose the Smart Scaler metrics and enable the external metrics API in the Datadog agent on that cluster.

- Scenario 2: The Smart Scaler agent runs on one cluster, and the application with its HPA configuration and the Datadog agent run on a different cluster. In this case:

  - Expose the Smart Scaler metrics on the Smart Scaler agent cluster.
  - Enable the external metrics API in the Datadog agent on the Datadog agent cluster so that the HPA can consume the Smart Scaler metrics.

In both scenarios, the Datadog agent and the application with its HPA configuration must run on the same cluster.
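The placement rules above can be summarized as a small planning function. This is a minimal Python sketch for illustration only: the function `plan_tasks` and the task labels are hypothetical and are not part of Smart Scaler or Datadog. It assumes each component is identified by a cluster name and rejects any placement where the Datadog agent and the application's HPA run on different clusters.

```python
from typing import Dict, List

# Hypothetical task labels used only in this sketch.
EXPOSE_METRICS = "Expose the Smart Scaler metrics to Datadog"
ENABLE_EXTERNAL_METRICS = "Enable the external metrics API in the Datadog agent"


def plan_tasks(smart_scaler_cluster: str, app_cluster: str, datadog_cluster: str) -> Dict[str, List[str]]:
    """Return the setup tasks to perform on each cluster.

    app_cluster is the cluster that runs the application and its HPA configuration.
    """
    # The Datadog agent must always run on the same cluster as the application and its HPA.
    if app_cluster != datadog_cluster:
        raise ValueError("The Datadog agent and the application with its HPA must run on the same cluster")

    plan: Dict[str, List[str]] = {}
    # Metrics are exposed on the cluster where the Smart Scaler agent runs.
    plan.setdefault(smart_scaler_cluster, []).append(EXPOSE_METRICS)
    # The external metrics API is enabled on the cluster where the Datadog agent and HPA run.
    plan.setdefault(datadog_cluster, []).append(ENABLE_EXTERNAL_METRICS)
    return plan
```

Scenario 1 corresponds to passing the same name three times, which yields both tasks on one cluster; Scenario 2 yields one task per cluster.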

For event scaling with Smart Scaler agent version 2.9.28 or earlier, each application deployment in a namespace must have its own HPA. Without an individual HPA, event scaling fails.

Starting with Smart Scaler agent version 2.9.29, event scaling no longer requires an individual HPA for each application deployment.
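The version rule above can be expressed as a simple check. This sketch assumes plain numeric `MAJOR.MINOR.PATCH` version strings (an optional leading `v` is stripped, but pre-release suffixes are not handled); the function name `requires_per_deployment_hpa` is hypothetical.

```python
def requires_per_deployment_hpa(agent_version: str) -> bool:
    """Return True if event scaling needs an individual HPA per application deployment.

    Versions 2.9.28 and earlier need one; 2.9.29 and later do not.
    Assumes a numeric MAJOR.MINOR.PATCH string such as "2.9.28".
    """
    parts = tuple(int(p) for p in agent_version.lstrip("v").split("."))
    # Tuple comparison orders versions numerically, so (2, 9, 3) < (2, 9, 28).
    return parts <= (2, 9, 28)
```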

Expose the Smart Scaler Metrics to Datadog

You must expose the Smart Scaler metrics to Datadog, from which the HPA will extract them.

Step 1: Create a ConfigMap for OpenMetrics

- Create a `conf.yaml` file that points the `openmetrics_endpoint` to the `inference-agent-service` using the following template:

```yaml
init_config:

instances:
  - openmetrics_endpoint: http://inference-agent-service.smart-scaler.svc.cluster.local:8080/metrics
    namespace: "smart-scaler"
    metrics:
      - smartscaler_hpa_num_pods: smartscaler_hpa_num_pods
```

- Create the `openmetrics-config` ConfigMap in the `datadog` namespace using the following command:

```shell
kubectl create configmap openmetrics-config -n datadog --from-file=conf.yaml
```
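To confirm that the endpoint in `conf.yaml` actually serves `smartscaler_hpa_num_pods`, you can inspect the raw metrics text. The following Python sketch parses simple Prometheus/OpenMetrics text exposition lines and returns the samples for one metric. It assumes the metric carries the `ss_deployment_name`, `ss_namespace`, and `ss_cluster_name` labels that the HPA selector later filters on; the function name `find_samples` is hypothetical, and label values that contain `}` are not handled.

```python
import re
from typing import Dict, List, Tuple

# Matches a sample line such as: name{label="value",...} 3 [timestamp]
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)'
)
# Matches one label pair: key="value" (escaped quotes inside the value are kept as-is).
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def find_samples(exposition: str, metric: str = "smartscaler_hpa_num_pods") -> List[Tuple[Dict[str, str], float]]:
    """Return (labels, value) pairs for `metric` found in metrics exposition text."""
    samples = []
    for line in exposition.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if not match or match.group("name") != metric:
            continue
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        samples.append((labels, float(match.group("value"))))
    return samples
```

For example, after running `kubectl port-forward -n smart-scaler svc/inference-agent-service 8080:8080`, you could fetch `http://localhost:8080/metrics` with `urllib.request.urlopen`, decode the body, and pass it to `find_samples`. An empty result suggests the metric is not being exposed at that endpoint.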

Step 2: Upgrade the Datadog Chart

- Add the following settings to the existing configuration in the `datadog-values.yaml` file. The `agents` settings mount the `openmetrics-config` ConfigMap that you created in Step 1.

```yaml
agents:
  # Mount the OpenMetrics check configuration into the Datadog agent.
  volumeMounts:
    - name: openmetrics-config-1
      mountPath: /etc/datadog-agent/conf.d/openmetrics.d/
  volumes:
    - name: openmetrics-config-1
      configMap:
        name: openmetrics-config
        items:
          - key: conf.yaml
            path: conf.yaml

# Enable the Cluster Agent external metrics provider.
clusterAgent:
  enabled: true
  metricsProvider:
    enabled: true
    useDatadogMetrics: true
```

- Upgrade the Datadog chart with the updated `datadog-values.yaml` file using the following command:

```shell
helm upgrade --install datadog -f datadog-values.yaml datadog/datadog -n datadog
```
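Before running `helm upgrade`, you may want to check that the values file contains the settings from this step. The following sketch works on the values file after it has been loaded into a Python dictionary (for example, with PyYAML's `yaml.safe_load`, a third-party package that the sketch itself does not import). The helper names `missing_settings` and `mounts_openmetrics_config` are hypothetical; the checked keys are the ones shown in the template above.

```python
from typing import Any, Dict, List

# Settings from the template above, as (key path, expected value).
REQUIRED_SETTINGS = [
    (("clusterAgent", "enabled"), True),
    (("clusterAgent", "metricsProvider", "enabled"), True),
    (("clusterAgent", "metricsProvider", "useDatadogMetrics"), True),
]


def missing_settings(values: Dict[str, Any]) -> List[str]:
    """Return dotted paths of required settings that are absent or not set as expected."""
    problems = []
    for path, expected in REQUIRED_SETTINGS:
        node: Any = values
        # Walk the nested dictionaries; a missing key yields None.
        for key in path:
            node = node.get(key) if isinstance(node, dict) else None
        if node != expected:
            problems.append(".".join(path))
    return problems


def mounts_openmetrics_config(values: Dict[str, Any], configmap: str = "openmetrics-config") -> bool:
    """Return True if a volume backed by `configmap` is also listed under agents.volumeMounts."""
    agents = values.get("agents") or {}
    backed_volumes = {
        volume.get("name")
        for volume in agents.get("volumes", [])
        if (volume.get("configMap") or {}).get("name") == configmap
    }
    mounted = {mount.get("name") for mount in agents.get("volumeMounts", [])}
    return bool(backed_volumes & mounted)
```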

Step 3: Verify the External Metrics API

After upgrading Datadog, verify that the external metrics API is configured correctly:

- List the API services: Run the following command to list the API services registered in the cluster:

```shell
kubectl get apiservice -A
```

- Filter by the external metrics API: Look for the `v1beta1.external.metrics.k8s.io` API service. You can use `grep` to filter the results:

```shell
kubectl get apiservice -A | grep v1beta1.external.metrics.k8s.io
```

- Verify the service status: Make sure that the service is listed as `True`, which indicates that it is available and functioning properly. For example:

```text
v1beta1.external.metrics.k8s.io   datadog/datadog-cluster-agent-metrics-api   True   11h
```

In this output, `datadog/datadog-cluster-agent-metrics-api` is listed with a status of `True`, which confirms that the Datadog external metrics API is operational after the upgrade.

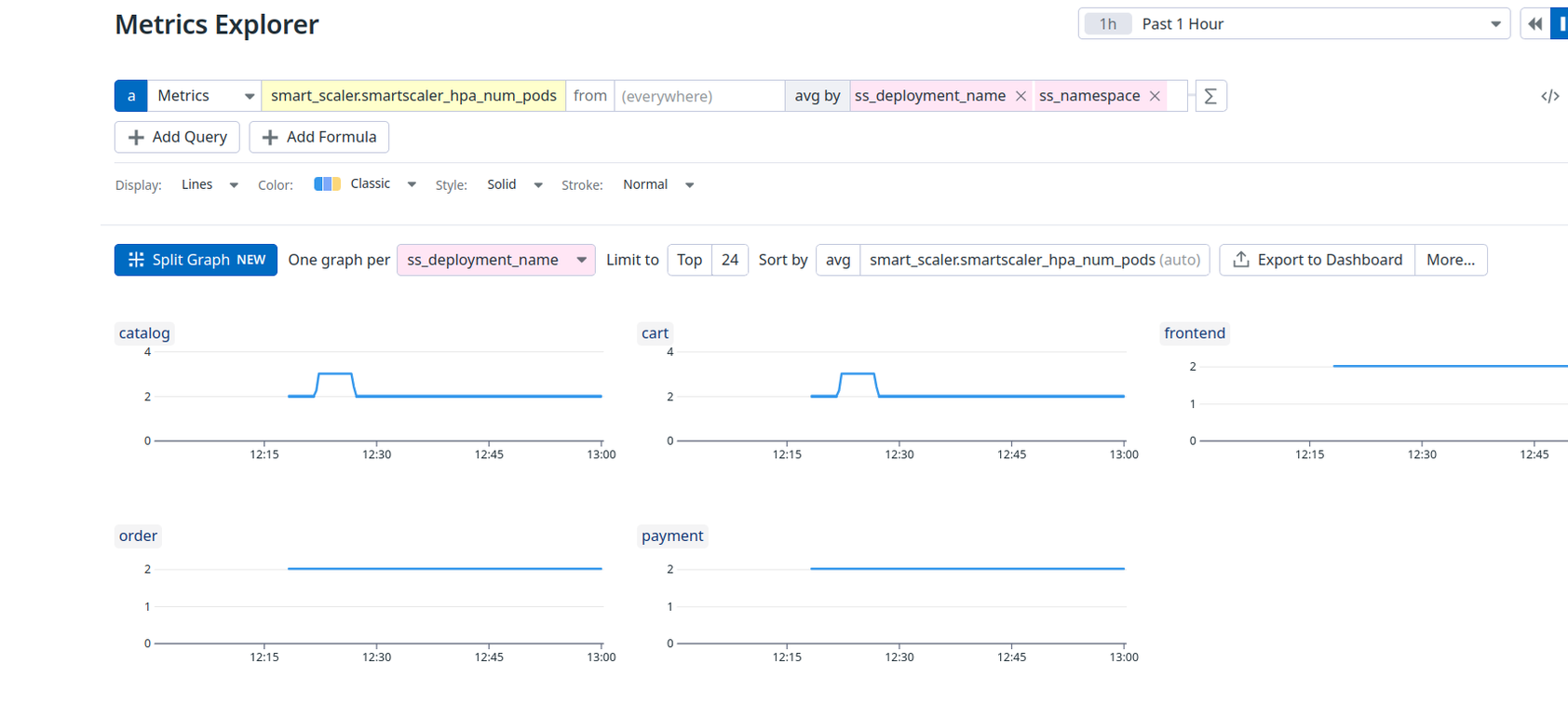
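The status check above can also be scripted. This sketch parses the text output of `kubectl get apiservice` and reports the `AVAILABLE` value for `v1beta1.external.metrics.k8s.io`. It assumes the default column layout (`NAME`, `SERVICE`, `AVAILABLE`, `AGE`) and, when `check_cluster` is called, that `kubectl` is on the `PATH` with a configured context; both function names are hypothetical.

```python
import subprocess
from typing import Optional

API_SERVICE = "v1beta1.external.metrics.k8s.io"


def external_metrics_available(apiservice_output: str, name: str = API_SERVICE) -> Optional[bool]:
    """Return True/False from the AVAILABLE column for `name`, or None if it is not listed."""
    for line in apiservice_output.splitlines():
        columns = line.split()
        # Columns are NAME, SERVICE, AVAILABLE, AGE; the header row never matches `name`.
        if len(columns) >= 3 and columns[0] == name:
            # A failing service shows e.g. "False (FailedDiscoveryCheck)", so columns[2] is "False".
            return columns[2] == "True"
    return None


def check_cluster() -> Optional[bool]:
    """Run kubectl against the current context and check the external metrics API service."""
    result = subprocess.run(["kubectl", "get", "apiservice"], capture_output=True, text=True, check=True)
    return external_metrics_available(result.stdout)
```

A `None` result means the API service is not registered at all, which usually points to the Cluster Agent metrics provider not being enabled.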

Step 4: Verify the Metrics on the Datadog Dashboard

Verify the Smart Scaler metrics by visualizing them on the Datadog dashboard.

The following figure illustrates the scaling recommendation metric, `smartscaler_hpa_num_pods`.
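When you search for the metric in Datadog, note that the OpenMetrics check prefixes metric names with the `namespace` set in `conf.yaml`. The HPA in this topic references `smart_scaler.smartscaler_hpa_num_pods` even though the namespace is configured as `smart-scaler`. The following sketch shows that mapping, assuming that characters other than ASCII letters, digits, underscores, and periods are converted to underscores per Datadog's metric naming rules. The function `datadog_metric_name` is hypothetical and only illustrates the expected name.

```python
import re


def datadog_metric_name(namespace: str, metric: str) -> str:
    """Build the metric name to look for in Datadog from an OpenMetrics namespace and metric.

    Assumes disallowed characters (anything other than ASCII letters, digits,
    underscores, and periods) are replaced with underscores.
    """
    raw = f"{namespace}.{metric}" if namespace else metric
    return re.sub(r"[^A-Za-z0-9_.]", "_", raw)
```

For the configuration in this topic, `datadog_metric_name("smart-scaler", "smartscaler_hpa_num_pods")` gives the name used in the HPA template.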

Enable the External Metrics API in the Datadog Agent of an Application Cluster

You must expose the Smart Scaler metrics to Datadog before you enable the external metrics API in the Datadog agent of an application cluster.

Step 1: Modify the Datadog Agent Configuration

- Add the following settings to the Datadog agent values file (`datadog-values.yaml`) to enable the external metrics API:

```yaml
clusterAgent:
  enabled: true
  metricsProvider:
    enabled: true
    useDatadogMetrics: true
```

- Upgrade the Datadog chart with the updated `datadog-values.yaml` file using the following command:

```shell
helm upgrade --install datadog -f datadog-values.yaml datadog/datadog -n datadog
```

Step 2: Verify the External Metrics API

After upgrading Datadog, verify that the external metrics API is configured correctly:

- List the API services: Run the following command to list the API services registered in the cluster:

```shell
kubectl get apiservice -A
```

- Filter by the external metrics API: Look for the `v1beta1.external.metrics.k8s.io` API service. You can use `grep` to filter the results:

```shell
kubectl get apiservice -A | grep v1beta1.external.metrics.k8s.io
```

- Verify the service status: Make sure that the service is listed as `True`, which indicates that it is available and functioning properly. For example:

```text
v1beta1.external.metrics.k8s.io   datadog/datadog-cluster-agent-metrics-api   True   11h
```

In this output, `datadog/datadog-cluster-agent-metrics-api` is listed with a status of `True`, which confirms that the Datadog external metrics API is operational after the upgrade.

Step 3: Create an HPA Object to Read Recommendations

You must create an HPA object that points to your Datadog data source to collect pod scaling recommendations.

- Create an HPA object file called `smart-scaler-hpa.yaml` that points to the `smartscaler_hpa_num_pods` metric to scale the deployment, using the following template:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: <hpa name>
  namespace: <namespace>
spec:
  # The Deployment that this HPA scales.
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  behavior:
    scaleDown:
      policies:
        - type: Pods
          value: 4
          periodSeconds: 60
        - type: Percent
          value: 10
          periodSeconds: 60
      stabilizationWindowSeconds: 10
  minReplicas: 2
  maxReplicas: 30
  metrics:
    - type: External
      external:
        metric:
          # Smart Scaler recommendation metric as named in Datadog.
          name: smart_scaler.smartscaler_hpa_num_pods
          # Tags that select the recommendation for one deployment.
          selector:
            matchLabels:
              ss_deployment_name: "<deployment name>"
              ss_namespace: "<namespace>"
              ss_cluster_name: "<cluster name>"
        target:
          type: AverageValue
          averageValue: "1"
```

- Apply the `smart-scaler-hpa.yaml` object file using the following command:

```shell
kubectl apply -f smart-scaler-hpa.yaml -n <application-namespace>
```

The HPA will now collect pod scaling recommendations from your Datadog data source.
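To see why the template uses `averageValue: "1"`, consider how the HPA turns an External metric with an `AverageValue` target into a replica count. Outside the default 10% tolerance, the desired count is the metric value divided by the target, rounded up, then clamped to `minReplicas` and `maxReplicas`. With a target of 1, the HPA therefore follows the value of `smartscaler_hpa_num_pods`, assuming that value is the recommended total pod count for the deployment. The following is a simplified Python sketch of that calculation; it omits the `behavior` scale-down policies and stabilization window from the template, and `desired_replicas` is a hypothetical helper, not a Kubernetes API.

```python
import math


def desired_replicas(metric_value: float, target_average_value: float, current_replicas: int,
                     min_replicas: int = 2, max_replicas: int = 30, tolerance: float = 0.1) -> int:
    """Approximate the HPA replica count for one External metric with an AverageValue target.

    Models only the core formula; behavior policies and stabilization are ignored.
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    # For AverageValue targets, the metric total is compared against target * current replicas.
    usage_ratio = metric_value / (target_average_value * current_replicas)
    if abs(usage_ratio - 1.0) <= tolerance:
        desired = current_replicas  # within tolerance: keep the current count
    else:
        desired = math.ceil(metric_value / target_average_value)
    # Clamp to the minReplicas/maxReplicas range from the template.
    return max(min_replicas, min(max_replicas, desired))
```

For example, if the recommendation is 7 pods while 2 are running, the sketch returns 7; a recommendation of 1 is raised to `minReplicas` (2), and a recommendation of 50 is capped at `maxReplicas` (30).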