Smart Scale an Application

This topic provides step-by-step instructions to install and configure the Smart Scaler for onboarding an application.

Prerequisites and Infrastructure Setup

This topic assumes the following prerequisites and infrastructure setup:

- You are using GitOps for your workload deployments.

- You are collecting your application metrics using Datadog (deployed using the Helm chart, not the Operator).

Understand the Infrastructure Setup

Let us understand the setup with an example:

- You have a microservice application that has been deployed in a cluster using ArgoCD.

- The application has a deployment and a service.

- You have also created a Horizontal Pod Autoscaler (HPA) for the deployment.

- The microservice is wrapped in a helm chart. The helm chart has a values.yaml file that defines the configuration for the deployment and the HPA.

See our example Git repository to better understand the setup.

| Required Tools | Purpose |
|---|---|
| Argo CD | Required for automatically synchronizing and deploying your application whenever a change is made in your GitHub repository. |
| GitHub | Currently, this is the only supported version control repository. |

Required Packages

| Package Required | Version | Installation Instructions |
|---|---|---|
| Git | 2.43.0 or later | Installing Git |
| Helm | 3.15.0 or later | Installing Helm |
| kubectl | 1.30.0 or later | Installing kubectl |
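The minimum versions in the table above can be checked from a terminal. The following is a minimal sketch, not part of the Smart Scaler tooling: `version_ge` and `check_tool` are hypothetical helper names, and the comparison assumes a `sort` that supports the `-V` (version sort) option, as GNU coreutils does.

```shell
#!/bin/sh
# Return success (0) when version $1 is greater than or equal to version $2.
# Assumption: `sort -V` (version sort) is available on this system.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: report whether an installed version meets the minimum.
# Arguments: tool name, installed version, minimum version.
check_tool() {
    tool=$1 installed=$2 minimum=$3
    if version_ge "$installed" "$minimum"; then
        echo "$tool $installed: OK (minimum $minimum)"
    else
        echo "$tool $installed: too old (minimum $minimum)"
        return 1
    fi
}

# Example usage for Git, whose `git --version` output has the version
# number in the third field:
#   check_tool git "$(git --version | awk '{print $3}')" 2.43.0
```

Helm and kubectl print their versions in different formats, so extracting the version string for those tools needs its own parsing before it is passed to `check_tool`.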

Step 1: Download the Smart Scaler Values File

- Add the Smart Scaler helm repository using the following command:

      helm repo add smart-scaler https://smartscaler.nexus.aveshalabs.io/repository/smartscaler-helm-ent-prod/

- Download the ss-agent-values.yaml file by visiting the Deploy Agents page on the Smart Scaler management console. You must modify the values in this file and use it to install the Smart Scaler Agent.

Step 2: Install the Smart Scaler Agent

- Modify the ss-agent-values.yaml file that you downloaded in step 1 to include the following values:

      agentConfiguration:
        # DO NOT CHANGE THIS
        host: https://gateway.saas1.smart-scaler.io
        # IMPORTANT: USER NEEDS TO CHANGE CLUSTERDISPLAYNAME TO MATCH THE CLUSTER NAME YOU DEFINED IN DATADOG/PROMETHEUS/NEW RELIC OR ANOTHER METRICS DATA SOURCE
        clusterDisplayName: smart-scaler-agent
        # DO NOT CHANGE THIS
        clientID: tenant-<tenantID>
        # DO NOT CHANGE THIS
        clientSecret: <Avesha-provided secret in your ss-agent-values.yaml file>
      namedDataSources:
        - name: "" # This name will be used in your configurations to refer to the datasource
          datasourceType: "" # prometheus/datadog
          url: "" # URL of the datasource
          credentials:
            username: "" # (Optional) only required for prometheus
            password: "" # (Optional) only required for prometheus
            apiKey: "" # (Optional) only required for datadog
            appKey: "" # (Optional) only required for datadog
            controllerAccount: "" # (Optional) only required for appdynamics # AppDynamics support is coming soon
            clientName: "" # (Optional) only required for appdynamics # AppDynamics support is coming soon
            clientSecret: "" # (Optional) only required for appdynamics # AppDynamics support is coming soon
            grantType: "" # (Optional) only required for appdynamics # AppDynamics support is coming soon
            accessTokenPath: "" # (Optional) only required for appdynamics # AppDynamics support is coming soon

- Deploy the agent in the cluster by running the following command:

  Note: In the command below, remove the --set ebpf.enabled=true option if you do not use eBPF as the monitoring tool.

      helm install smartscaler smart-scaler/smartscaler-agent -f ss-agent-values.yaml -n smart-scaler --create-namespace --set ebpf.enabled=true

If you follow a GitOps approach, you can deploy the agent through your GitOps workflow instead of running this command directly.
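Because the downloaded values file must be edited before installation, it can help to list the fields that still hold the empty placeholder value before running the install command. The following is a minimal sketch; `find_empty_values` is a hypothetical helper name, and it only reports lines whose value is `""`. Many of those fields are optional for a given data source, so the output is a list to review, not a list of errors.

```shell
#!/bin/sh
# List lines in a values file whose value is still the empty-string
# placeholder (""), optionally followed by a trailing comment.
# Prints "line-number:content" for each match.
# Returns non-zero when no placeholder lines are found.
find_empty_values() {
    grep -nE ':[[:space:]]*""[[:space:]]*(#.*)?$' "$1"
}

# Example usage:
#   find_empty_values ss-agent-values.yaml
```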

Verify the Agent Installation

Run the following command to verify the installation:

kubectl get pods -n smart-scaler

Expected Output

    NAME                                        READY   STATUS             RESTARTS      AGE
    agent-controller-manager-56476b5676-jfhst   2/2     Running            0             75s
    git-operations-5476c685c6-nc79m             1/1     Running            0             75s
    inference-agent-5b8f87c49f-w9zw4            0/1     CrashLoopBackOff   3 (29s ago)   75s

In the above output, the Inference Agent is in an error state as it restarts in a loop. This error is expected at this point because the Inference Agent configuration is not yet complete. (You can also observe the error loading the configuration by looking at the log for the inference agent.) Proceed to step 3.
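When the pod list is long, a small filter can pick out the pods that are not in the Running state. The following is a minimal sketch; `pods_not_running` is a hypothetical helper name, and it assumes the default table output of kubectl get pods, where the third column is STATUS.

```shell
#!/bin/sh
# Print the name and status of every pod that is not in the Running state.
# Reads `kubectl get pods` table output on stdin and skips the header line.
pods_not_running() {
    awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Example usage:
#   kubectl get pods -n smart-scaler | pods_not_running
```

At this stage of the installation, the only pod the filter should report is the Inference Agent.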

Step 3: Configure the Application for Basic Smart Scaler Support

This section describes how to configure an application for Smart Scaling. The same configuration also supports ArgoCD-based Event Scaling; the Git-related settings for ArgoCD-based Event Scaling are described later.

To configure the application, you must define your application infrastructure. The topic also assumes that you have configured custom values overrides for your HPAs.

Define your Application Configuration for the Smart Scaler Agent

Installing the Smart Scaler Agent creates a default ConfigMap. To gain more flexibility, you can remove the default ConfigMap and create a new one.

Use this command to remove the default ConfigMap that comes with the Smart Scaler Agent installation:

kubectl delete configmap <configmap-name> -n <namespace>

Example

kubectl delete configmap smart-scaler-config -n smart-scaler

- Create an ss-appconfig-values.yaml file with the following content:

      agentConfiguration:
        # This will be pre populated by SmartScaler
        clientID: tenant-<tenant ID>
      namedDataSources:
        - name: "" # This is the same name that you used in the agent configuration
          datasourceType: "" # This is the type of the datasource
          url: "" # URL of the datasource
      namedGitSecrets:
        # This is the secret that will be used to access the git repository for Event Auto-scaling. Use as many secrets as you might need for different repositories.
        - name: "" # This is the name of the secret. This will be used in the ApplicationConfig to refer the secret.
          gitUsername: "" # Github Username (plaintext)
          gitPassword: "" # PAT token (plaintext)
          url: "" # URL of the git repository
      configGenerator:
        apps:
          - app: "" # name of the application. e.g. awesome-app
            app_version: "" # version of the application. e.g. 1.0
            namedDatasource: "" # reference to the namedDataSources. e.g. datadog
            default_fallback: 3 # Default fallback value
            use_collector_queries: false # Use only datasource collector queries
            gitConfig: # These are global configurations for the git repository. It will be a fallback if the deployment specific configurations are not provided. You can skip this if you don't want to use the global configurations.
              branch: "" # branch of the git repo. e.g. main
              repository: "" # url of the git repo where deployment charts are stored. e.g. https://github.com/smart-scaler/smartscaler-tutorials
              secretRef: "" # reference to the namedGitSecrets.
            clusters:
              - name: "" # name of the cluster where the application is deployed. This should be same as your datadog metrics label. e.g. my-awesome-cluster
                namespaces:
                  - name: "" # name of the namespace. e.g. acmefitness
                    metricsType: "" # type of the metrics. e.g. istio
                    deployments: # list of k8s deployments
                      - name: "" # name of your k8s application deployment. e.g. cart
                        fallback: 3 # Fallback value
                        eventConfig: # configurations for event auto-scaling
                          gitPath: "" # path to the values.yaml file in the git repo. This is the location of the values file where you made the changes to enable SmartScaler. e.g. custom-values.yaml
                          gitConfig: # These are the configurations for the git repository. It will override the global configurations.
                            branch: "" # branch of the git repo for the deployment. e.g. main
                            repository: "" # url of the git repo where deployment charts are stored. e.g. https://github.com/smart-scaler/smartscaler-tutorials
                            secretRef: "" # reference to the namedGitSecrets
                      # Add more deployments here

- Deploy the configuration by running the following command:

      helm install smartscaler-configs smart-scaler/smartscaler-configurations -f ss-appconfig-values.yaml -n smart-scaler

  The helm template will generate the necessary Queries and ApplicationConfig configurations based on your input and create the desired ConfigMap for the Inference Agent and ApplicationConfig CR for the event autoscaler.

Verify the Configuration

Run the following command to verify the configuration:

kubectl get applicationconfigs.agent.smart-scaler.io -n smart-scaler

Expected Output

    NAME          AGE
    awesome-app   10s

Run the following command to verify the ConfigMap:

kubectl get configmap -n smart-scaler

Expected Output

    NAME                                 DATA   AGE
    agent-controller-autoscaler-config   2      1m
    agent-controller-manager-config      1      1m
    config-helper-ui-nginx               1      1m
    kube-root-ca.crt                     1      1m
    smart-scaler-config                  1      1m

Monitor the Metrics

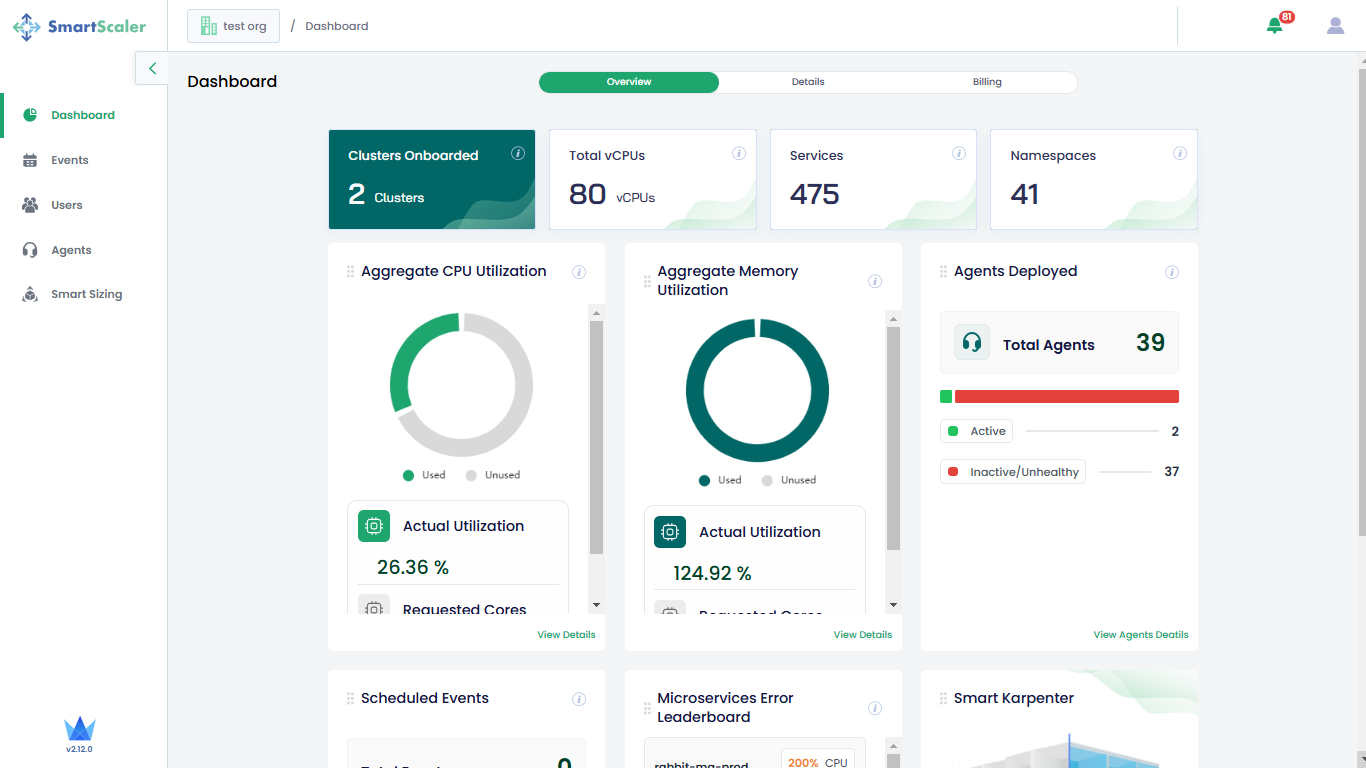

If the configuration verifies correctly, log in to the Smart Scaler management console and confirm that your application and services appear in the Dashboard pick lists. Check that No of Pods, CPU Usage, and Requests Served (SLO) show the values you expect.

If the No of Pods, CPU Usage, and Requests Served (SLO) look good, you have successfully installed and configured the Smart Scaler. You can now start using the Smart Scaler to autoscale your applications.

If they are missing, you can troubleshoot with some of the following steps:

- Make sure the Inference Agent is running successfully using the following command:

      kubectl get pods -n smart-scaler

  Expected Output

      NAME                                        READY   STATUS    RESTARTS   AGE
      agent-controller-manager-56476b5676-jfhst   2/2     Running   0          86m
      git-operations-5476c685c6-nc79m             1/1     Running   0          86m
      inference-agent-5b8f87c49f-hc77v            1/1     Running   0          4m54s

- If it is not running, or if it is running but you still don't see your application on the SaaS management console, check the logs for the agent using the following command:

      kubectl logs -n smart-scaler inference-agent-5b8f87c49f-hc77v

- In the output, check if the log ends with the following lines:

      {"level":"info","ts":1722545554.283851,"msg":"Metrics sent successfully for app &{App:boutique AppVersion:1.0 Namespace: ClusterName:}"}
      {"level":"debug","ts":1722545563.1009502,"msg":"errorCount value = 0"}

  If it ends with the above lines, your agent is communicating successfully with the Smart Scaler cloud. If not, it is not communicating.

  If it is communicating, but the Smart Scaler cloud does not have the right information, there is probably a problem with your application configuration. Look at the log in more detail. You should see the following periodic blocks:

      {"level":"info","ts":1722545322.270564,"msg":"Namespace: demo"}
      {"level":"debug","ts":1722545322.2759717,"msg":"filtering deployments for namespace demo"}
      {"level":"debug","ts":1722545322.275996,"msg":"Filtered deployment list: [adservice cartservice checkoutservice emailservice frontend paymentservice productcatalogservice recommendationservice shippingservice]"}
      {"level":"info","ts":1722545322.6678247,"msg":"Metrics sent successfully for app &{App:boutique AppVersion:1.0 Namespace: ClusterName:}"}
      {"level":"debug","ts":1722545323.1008768,"msg":"errorCount value = 0"}

  This shows successful collection of data for the services specified by your configuration file.

  If services you expect to see are missing, or the log shows errors trying to collect them, look at your configuration file and verify that the namedDataSources are correctly configured for where to collect the data and that the application namespaces and deployments are correctly identified.

If you are still stuck, contact Avesha Support.
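The check on the end of the agent log can be scripted. The following is a minimal sketch; `agent_log_healthy` is a hypothetical helper name, and the matching relies only on the two message strings shown in the sample log output in this topic, so it may need adjusting if the agent's log format differs in your version.

```shell
#!/bin/sh
# Succeed when the last two log lines on stdin match the healthy pattern:
# a "Metrics sent successfully" line followed by "errorCount value = 0".
agent_log_healthy() {
    last_two=$(tail -n 2)
    first=$(printf '%s\n' "$last_two" | head -n 1)
    second=$(printf '%s\n' "$last_two" | tail -n 1)
    case $first in *'"msg":"Metrics sent successfully'*) ;; *) return 1 ;; esac
    case $second in *'"msg":"errorCount value = 0"'*) ;; *) return 1 ;; esac
}

# Example usage (replace the pod name with the one from your cluster):
#   kubectl logs -n smart-scaler inference-agent-5b8f87c49f-hc77v | agent_log_healthy \
#       && echo "agent is reporting metrics"
```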

You can create events from the management console to trigger autoscaling based on planned event times.

Configure HPA

After you have established communication with the Smart Scaler cloud, you must configure HPA to listen to the recommendations (when they are available).

You must add the configuration in the helm chart that wraps the microservice. The helm chart contains a values.yaml file that defines the configuration for the deployment and the HPA.

- Add the following snippet to your helm chart values.yaml file to configure HPA to use a supported metric agent as an external metric source.

      smartscaler:
        enabled: false
        minReplicas: 1
        maxReplicas: 10
        metrics:
          - type: External
            external:
              metric:
                name: smart_scaler.smartscaler_hpa_num_pods
                selector:
                  matchLabels:
                    ss_deployment_name: "<deployment name>"
                    ss_namespace: "<namespace>"
                    ss_cluster_name: "<cluster name>"
              target:
                type: AverageValue
                averageValue: "1"

You must:

- Modify the HPA template to consume the data from the above values.

- Add this configuration in each deployment that you want to autoscale.
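One way an HPA template might consume these values is sketched below. This is an illustration under stated assumptions, not the template from the example repository: the file name hpa-smartscaler.yaml, the CHART_DIR variable, and the use of .Release.Name as both the HPA name and the target Deployment name are all hypothetical, and the sketch assumes the metrics list sits under the smartscaler key in values.yaml. The script only writes the template file; adapt the names to your chart before using it.

```shell
#!/bin/sh
# Sketch only: write a minimal HPA template into a chart directory.
# Assumptions: CHART_DIR points at your chart (defaults to the current
# directory), and the Deployment is named after the Helm release.
CHART_DIR=${CHART_DIR:-.}
mkdir -p "$CHART_DIR/templates"

# The quoted 'EOF' keeps the shell from expanding the Helm template syntax.
cat > "$CHART_DIR/templates/hpa-smartscaler.yaml" <<'EOF'
{{- if .Values.smartscaler.enabled }}
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: {{ .Release.Name }}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: {{ .Release.Name }}
  minReplicas: {{ .Values.smartscaler.minReplicas }}
  maxReplicas: {{ .Values.smartscaler.maxReplicas }}
  metrics:
    {{- toYaml .Values.smartscaler.metrics | nindent 4 }}
{{- end }}
EOF
```

With a template like this in place, the HPA is rendered only when smartscaler.enabled is set to true, which keeps the change inert until you are ready to switch it on. Rendering the chart locally with helm template is a reasonable way to inspect the result before committing it.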

See the HPA documentation to understand how to configure Datadog or Prometheus to scrape the metrics from the Inference Agent.