Manage Inference Endpoints

An Inference Endpoint is a hosted service that performs inference tasks, such as making predictions or generating outputs, using a pre-trained AI model. It supports real-time or batch processing for AI tasks such as natural language processing and speech recognition. An Inference Endpoint serves as the operational interface through which deployed AI models are made available to users or applications.

This topic describes how to manage Inference Endpoints on the EGS platform. An admin can create and manage multiple Inference Endpoints.

Across our documentation, we refer to the workspace as the slice workspace. The two terms are used interchangeably.

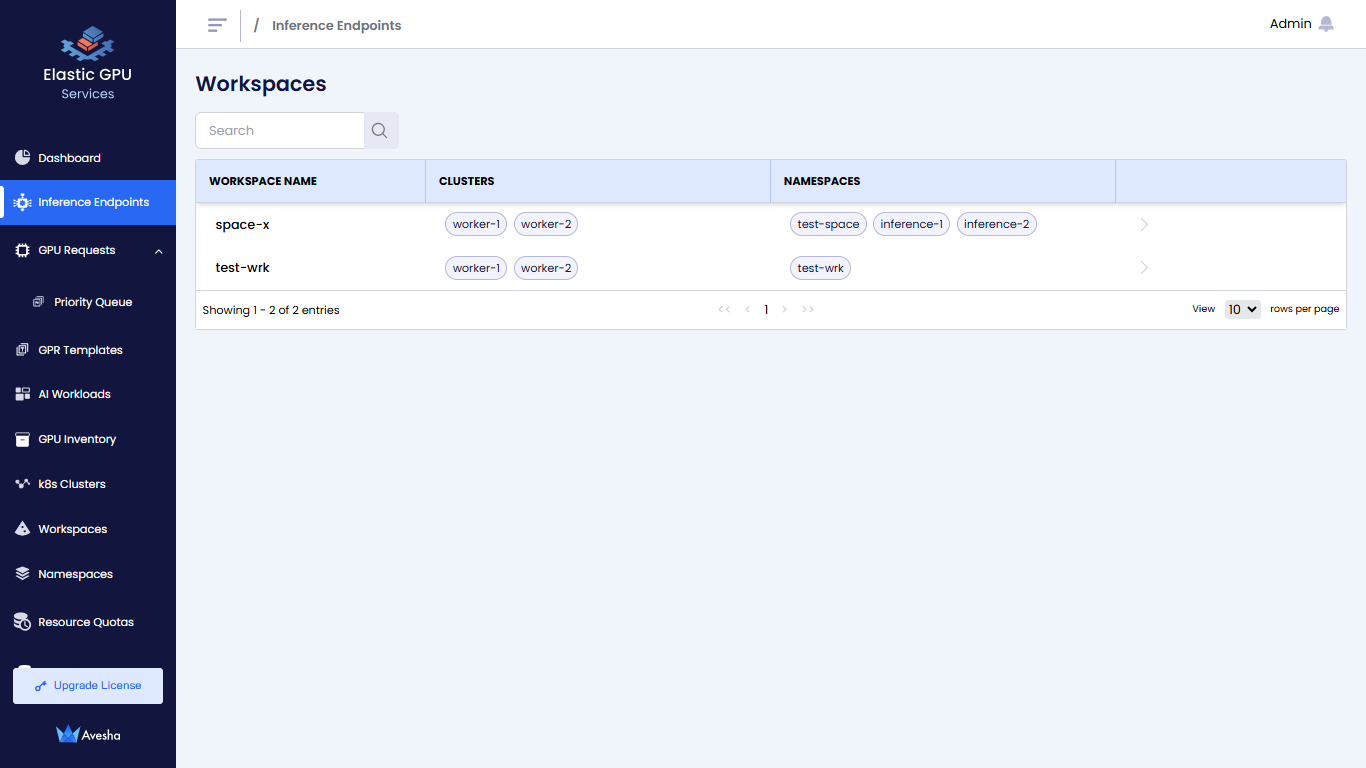

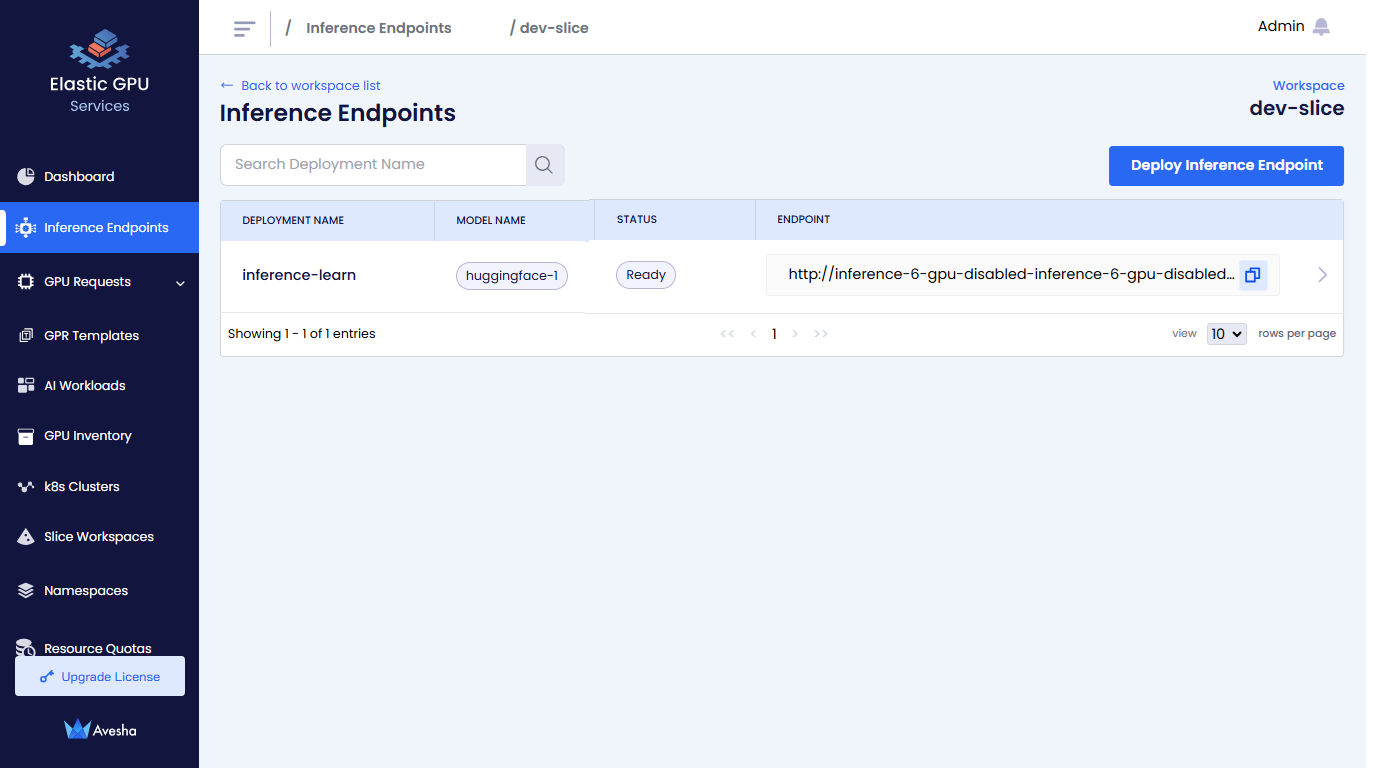

View Inference Endpoints

- Go to Inference Endpoints on the left sidebar.

- On the Workspaces page, click the workspace whose Inference Endpoints you want to view.

-

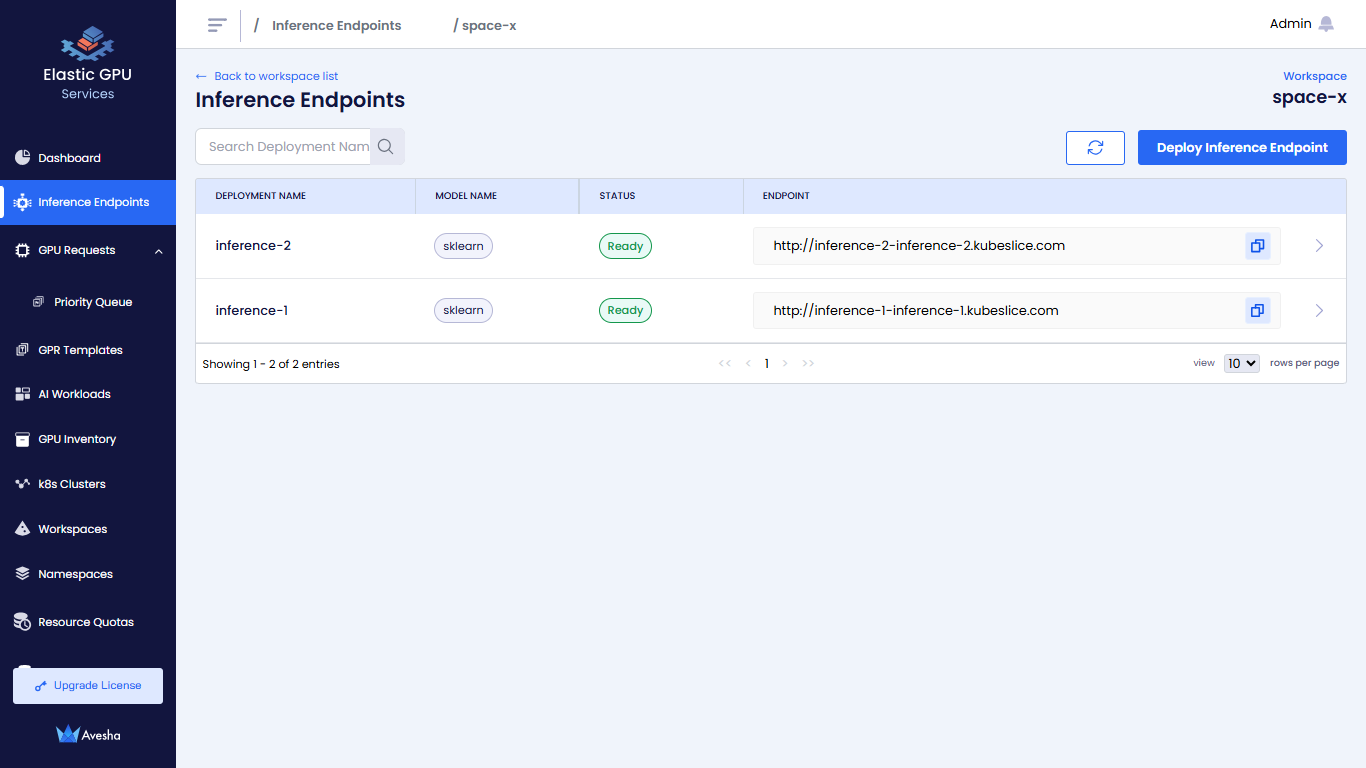

On the Inference Endpoints page, you see a list of Inference Endpoints for that workspace.

- Click the > icon for the Inference Endpoint that you want to view.

Deploy an Inference Endpoint

- Go to Inference Endpoints on the left sidebar.

- On the Workspaces page, click the workspace in which you want to deploy an Inference Endpoint.

- On the Inference Endpoints page, click Deploy Inference Endpoint.

-

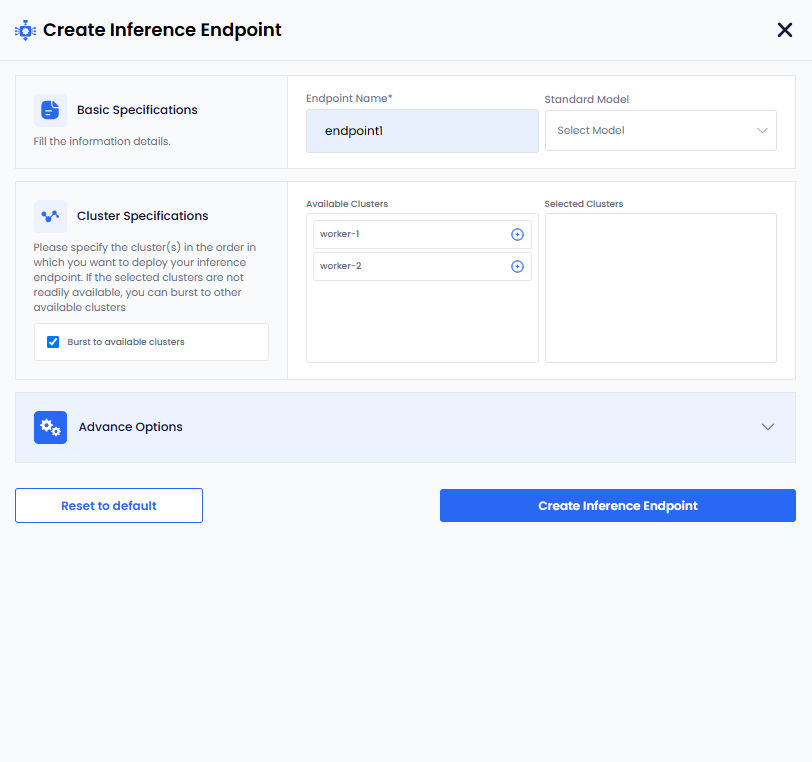

On the Create Inference Endpoint pane, under Basic Specifications:

- Enter a name for the Inference Endpoint in the Endpoint Name text box.

- Select a standard model name from the Select Model dropdown menu. To populate this dropdown menu with standard model names, you must configure a ConfigMap that stores the list of standard models. To know more, see Configure the ConfigMap for Selecting Standard Models.

- Under Cluster Specifications:

- (Optional) The Burst to available clusters checkbox is selected by default. Clear the checkbox to disable bursting. To know more, see Bursting Scenarios.

- Under Available Clusters, select the clusters on which you want to deploy the Inference Endpoint, in your order of preference.

-

-

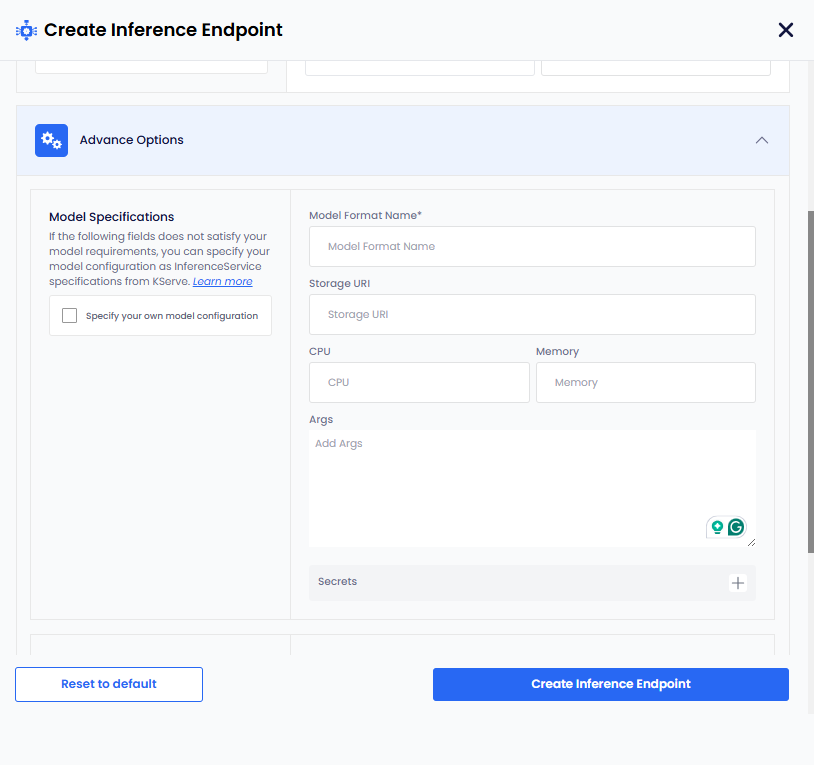

Under Advanced Options, for Model Specifications:

infoThe following parameters are standard and work for most models. However, if these parameters do not meet your model requirements, then select the Specify your own model configuration checkbox. To know more, see own model configuration.

- Enter a name in the Model Format Name text box.

- Add the storage URI in the Storage URI text box.

- Add the CPU value in the CPU text box.

- Add the Memory value in the Memory text box.

- Add the arguments in the Args text box.

- To add a secret key-value pair, click the plus sign next to Secret and enter the key and value.

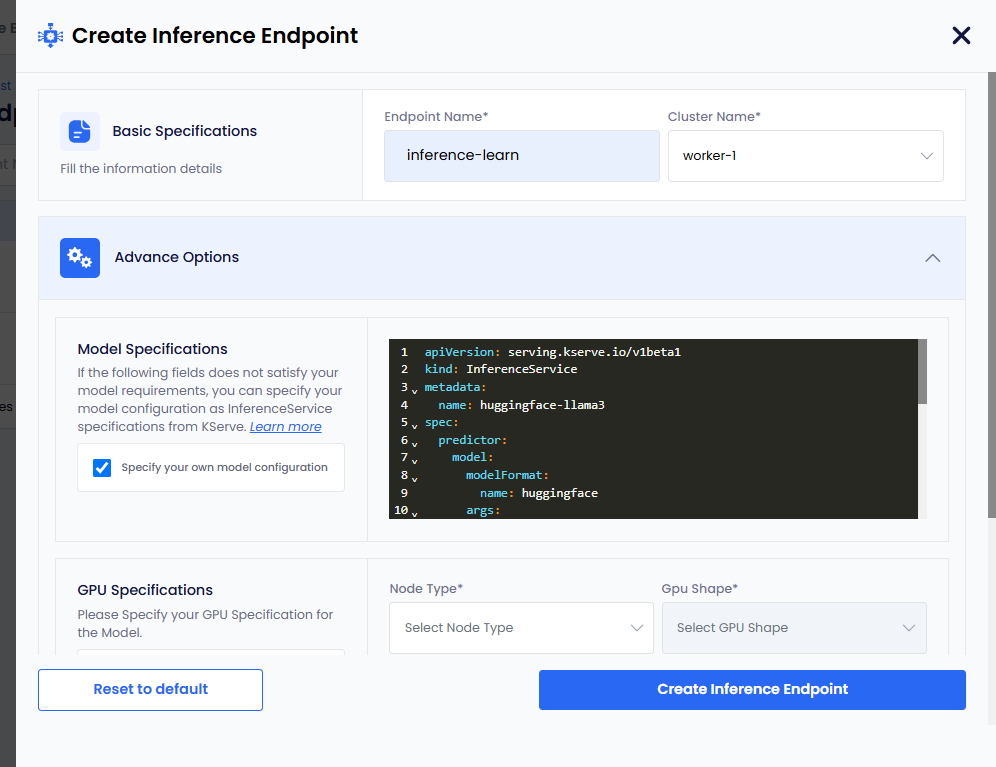
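The Model Specifications fields appear to correspond to the keys of a STANDARD model spec in the example ConfigMaps under Configure the ConfigMap for Selecting Standard Models (modelFormat, storageUri, args, secrets, cpu, memory). The following Python sketch assembles that structure from form values. The field-to-key mapping is an assumption inferred from those examples, and build_standard_model_specs is a hypothetical helper, not an EGS API.

```python
import json
from typing import Dict, List, Optional


def build_standard_model_specs(
    model_format: str,
    storage_uri: str,
    cpu: str,
    memory: str,
    args: Optional[List[str]] = None,
    secrets: Optional[Dict[str, str]] = None,
) -> dict:
    """Hypothetical helper: shape Model Specifications values like the
    STANDARD model-specs.yaml examples (field-to-key mapping is assumed)."""
    model_specs = {
        "modelFormat": model_format,  # Model Format Name
        "storageUri": storage_uri,    # Storage URI
        "args": list(args or []),     # Args, one list entry per argument
        "cpu": cpu,                   # CPU, kept as a string as in the examples
        "memory": memory,             # Memory, for example "2Gi"
    }
    if secrets:
        model_specs["secrets"] = dict(secrets)  # Secret key-value pairs
    return {"specsType": "STANDARD", "modelSpecs": model_specs}


if __name__ == "__main__":
    spec = build_standard_model_specs(
        model_format="sklearn",
        storage_uri="gs://kfserving-examples/models/sklearn/1.0/model",
        cpu="1",
        memory="2Gi",
        args=["--model_name=sklearn-iris", "--model_id=sklearn/iris"],
    )
    print(json.dumps(spec, indent=2))
```

Secrets are omitted from the result when none are given, so the sketch never emits an empty secrets map.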

Own Model Configuration

When the parameters provided under Model Specifications do not meet your model requirements, you can select the Specify your own model configuration checkbox.

To add your own model configuration:

- Select the Specify your own model configuration checkbox. A terminal screen appears.

- On the terminal screen, specify your model configuration as a KServe InferenceService specification. For more information, see the KServe documentation.

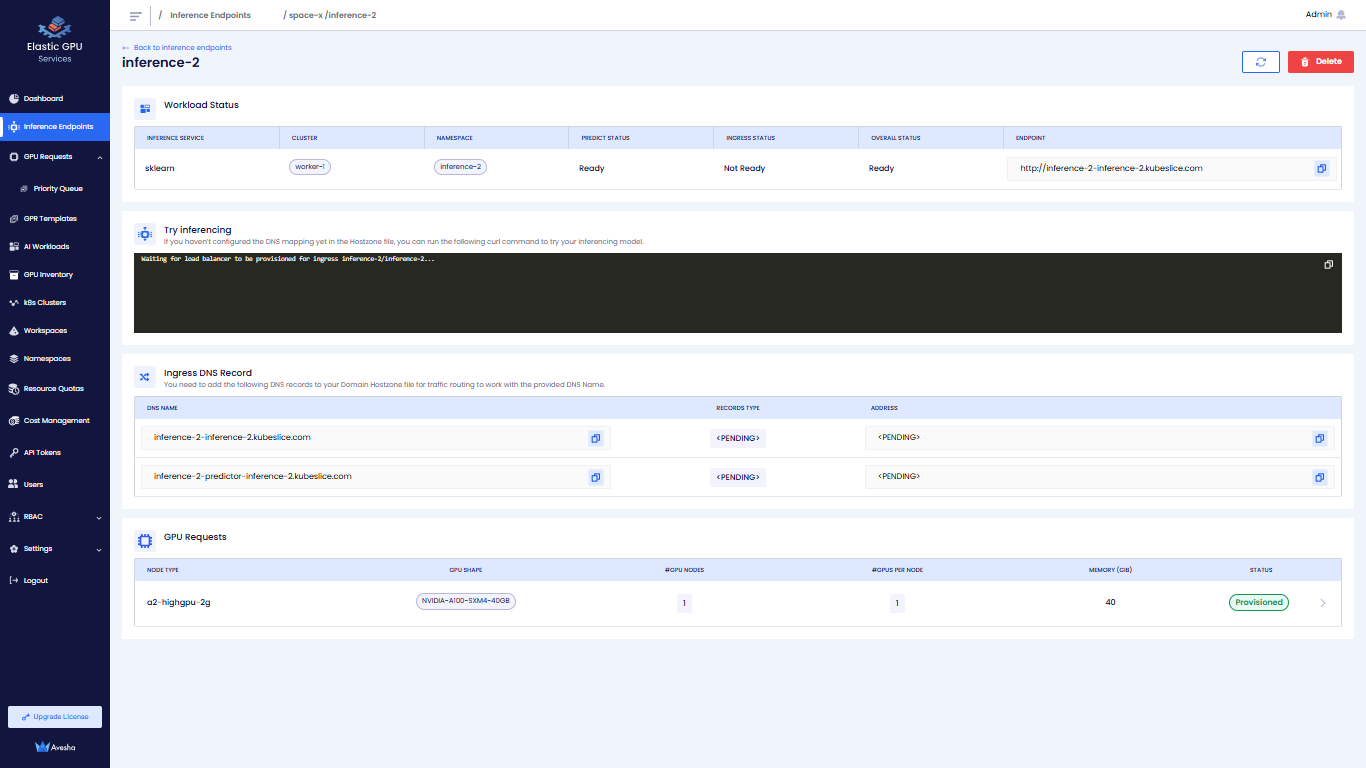
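For orientation, the sketch below builds a minimal KServe v1beta1 InferenceService, modeled on the sklearn-iris sample in the KServe documentation (which uses the same storage URI as Example 2), and prints it as JSON. JSON is, for practical purposes, valid YAML 1.2, but whether the EGS terminal screen accepts JSON rather than YAML, and whether it expects apiVersion (Example 1 omits it), are assumptions to verify. minimal_inference_service is a hypothetical helper.

```python
import json


def minimal_inference_service(name: str, model_format: str, storage_uri: str) -> dict:
    """Hypothetical helper: return a minimal KServe v1beta1 InferenceService."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    # KServe uses the model format to choose a serving runtime.
                    "modelFormat": {"name": model_format},
                    # Location of the trained model artifacts.
                    "storageUri": storage_uri,
                }
            }
        },
    }


if __name__ == "__main__":
    isvc = minimal_inference_service(
        "sklearn-iris", "sklearn", "gs://kfserving-examples/models/sklearn/1.0/model"
    )
    print(json.dumps(isvc, indent=2))
```

Fields such as args, resources, and env can be added under spec.predictor.model, as Example 1 does for the Hugging Face model.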

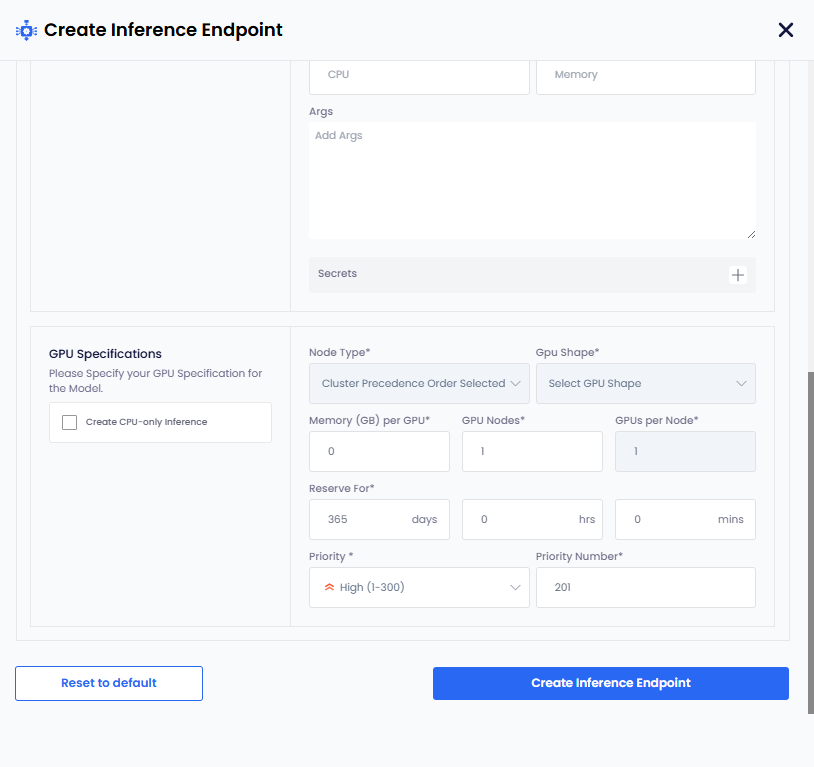

- Under GPU Specifications:

Info: If you want CPU-only inference, select the Create CPU-only Inference checkbox.

- Select a node type from the Node Type dropdown list.

- The GPU Shape and Memory per GPU values are populated automatically.

- The GPU Nodes and GPUs Per Node parameters have default values. Change them if you need different values.

- The Reserve For duration parameter, in DDHHMM format, has a default value of 365 days.

Info: The maximum duration is 365 days. You can change the duration to a value less than 365 days.

- The Priority parameter has a default value. To change it, select a different priority (low: 1-100, medium: 1-200, high: 1-300) from the dropdown list.

- If required, set a different priority number within the range of the selected priority. The priority number also has a default value that corresponds to the default priority.

- Click Create Inference Endpoint. The endpoint status is Pending at first and then changes to Ready.

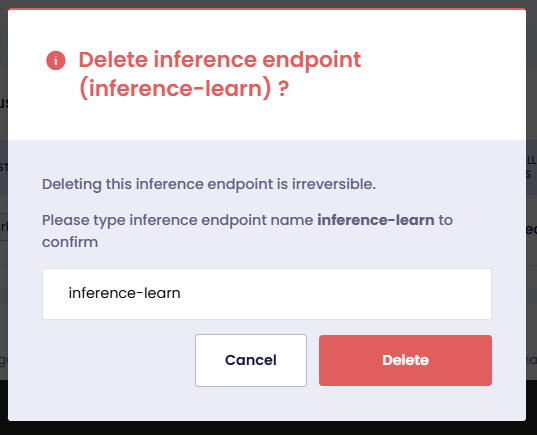
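The Reserve For and Priority inputs in the GPU Specifications steps above can be checked before you submit the form. The following Python sketch converts a DDHHMM value to minutes and enforces the 365-day maximum, and checks a priority number against the ranges listed in this topic (copied as written). It assumes that the last four digits are HHMM and that every digit before them is the day count, so 365 days is written 3650000; reserve_for_minutes and check_priority_number are hypothetical helpers.

```python
MAX_RESERVE_MINUTES = 365 * 24 * 60  # documented maximum: 365 days

# Priority number ranges as listed in this topic.
PRIORITY_RANGES = {"low": (1, 100), "medium": (1, 200), "high": (1, 300)}


def reserve_for_minutes(value: str) -> int:
    """Convert a Reserve For value in DDHHMM form to minutes.

    Assumption: the last four digits are HHMM and all preceding digits
    are days, so 365 days is "3650000".
    """
    if not (value.isascii() and value.isdigit()) or len(value) < 6:
        raise ValueError(f"not a DDHHMM value: {value!r}")
    days, hours, minutes = int(value[:-4]), int(value[-4:-2]), int(value[-2:])
    if hours > 23 or minutes > 59:
        raise ValueError(f"hours or minutes out of range: {value!r}")
    total = (days * 24 + hours) * 60 + minutes
    if total > MAX_RESERVE_MINUTES:
        raise ValueError("Reserve For cannot exceed 365 days")
    return total


def check_priority_number(level: str, number: int) -> int:
    """Reject a priority number outside the range of the selected priority."""
    low, high = PRIORITY_RANGES[level]
    if not low <= number <= high:
        raise ValueError(f"{level} priority accepts {low}-{high}, got {number}")
    return number
```

With these assumptions, reserve_for_minutes("3650000") returns 525600 (365 days) and reserve_for_minutes("071200") returns 10800 (7 days and 12 hours).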

Delete an Inference Endpoint

- On the Workspaces page, select the workspace that contains the Inference Endpoint you want to delete.

- On the Inference Endpoints page, click the deployment name or the right arrow next to the Inference Endpoint.

- Click Delete.

- Enter the name of the Inference Endpoint in the text box and click Delete to confirm.

Configure the ConfigMap for Selecting Standard Models

To ensure that the Select Model dropdown menu is populated correctly, follow these steps:

- Create the ConfigMap in the project namespace, for example, `kubeslice-avesha`.

- To identify the ConfigMap as a standard inference model specification, add the label `egs.kubeslice.io/type: model-specs` to the ConfigMap metadata.

- The data section of the ConfigMap must contain the key `model-specs.yaml`. This key holds the model specification in YAML format.

The following are three example ConfigMaps:

Example 1: llama3-8b with Custom InferenceService and GPU Specifications

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: llama3-8b
  namespace: kubeslice-avesha
  labels:
    egs.kubeslice.io/type: model-specs
data:
  model-specs.yaml: |
    specsType: "CUSTOM"
    rawModelSpecs: |
      kind: InferenceService
      metadata:
        name: huggingface-llama3
      spec:
        predictor:
          model:
            modelFormat:
              name: huggingface
            args:
              - --model_name=llama3
              - --model_id=meta-llama/meta-llama-3-8b-instruct
            resources:
              limits:
                cpu: "6"
                memory: 24Gi
                nvidia.com/gpu: "1"
              requests:
                cpu: "6"
                memory: 24Gi
                nvidia.com/gpu: "1"
            env:
              - name: HF_TOKEN
                valueFrom:
                  secretKeyRef:
                    name: hf-secret
                    key: HF_TOKEN
                    optional: false
    gpuSpecs:
      memory: 24
      totalNodes: 1
      gpusPerNode: 1
```

Example 2: sklearn-iris with GPU Specifications

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: sklearn-iris
  namespace: kubeslice-avesha
  labels:
    egs.kubeslice.io/type: model-specs
data:
  model-specs.yaml: |
    specsType: "STANDARD"
    modelSpecs:
      modelFormat: "sklearn"
      storageUri: "gs://kfserving-examples/models/sklearn/1.0/model"
      args:
        - --model_name=sklearn-iris
        - --model_id=sklearn/iris
      secrets:
        SK_LEARN_TOKEN: xxx-yyyy-zzz
      cpu: "1"
      memory: "2Gi"
    gpuSpecs:
      memory: 6
      totalNodes: 1
      gpusPerNode: 1
```

Example 3: sklearn without GPU

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: sklearn-iris-cpu
  namespace: kubeslice-avesha
  labels:
    egs.kubeslice.io/type: model-specs
data:
  model-specs.yaml: |
    specsType: "STANDARD"
    modelSpecs:
      modelFormat: "sklearn"
      storageUri: "gs://kfserving-examples/models/sklearn/1.0/model"
```

- Use the following command to apply the ConfigMap:

```bash
kubectl apply -f example-model-configmap.yaml
```

- Use the following command to verify that the ConfigMap is created correctly:

```bash
kubectl get configmap -n kubeslice-avesha -l egs.kubeslice.io/type=model-specs
```

This command lists all ConfigMaps in the kubeslice-avesha namespace that carry the required label. If your ConfigMap appears in the output, it has the namespace and label that the Select Model dropdown menu looks for.
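The kubectl check above confirms the namespace and label but not the model-specs.yaml data key. The following Python sketch checks all three requirements listed in this section against a ConfigMap represented as a dict, for example one parsed from kubectl JSON output. model_configmap_problems and the constant names are hypothetical; the label, key, and namespace values come from this topic.

```python
LABEL_KEY = "egs.kubeslice.io/type"
LABEL_VALUE = "model-specs"
DATA_KEY = "model-specs.yaml"


def model_configmap_problems(configmap: dict, project_namespace: str) -> list:
    """Return the requirements from this topic that the ConfigMap misses."""
    problems = []
    metadata = configmap.get("metadata") or {}
    if configmap.get("kind") != "ConfigMap":
        problems.append("kind is not ConfigMap")
    if metadata.get("namespace") != project_namespace:
        problems.append(f"not in project namespace {project_namespace}")
    if (metadata.get("labels") or {}).get(LABEL_KEY) != LABEL_VALUE:
        problems.append(f"missing label {LABEL_KEY}: {LABEL_VALUE}")
    if DATA_KEY not in (configmap.get("data") or {}):
        problems.append(f"data has no {DATA_KEY} key")
    return problems


if __name__ == "__main__":
    # Metadata from Example 2; the model-specs.yaml body is shortened here.
    example = {
        "kind": "ConfigMap",
        "metadata": {
            "name": "sklearn-iris",
            "namespace": "kubeslice-avesha",
            "labels": {LABEL_KEY: LABEL_VALUE},
        },
        "data": {DATA_KEY: 'specsType: "STANDARD"\n'},
    }
    print(model_configmap_problems(example, "kubeslice-avesha") or "ok")
```

To check a live object, you could parse the output of kubectl get configmap sklearn-iris -n kubeslice-avesha -o json with json.loads and pass the resulting dict to model_configmap_problems. The sketch does not validate the YAML inside model-specs.yaml.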

Bursting Scenarios

- When the Burst to Available Clusters option is enabled and no worker clusters are selected, the workload is assigned to the cluster with sufficient inventory and the shortest wait time.

- When the Burst to Available Clusters option is disabled:

- At least one cluster must be selected to process the workload.

- For a single selected cluster, you must specify the GPU node type.

- For multiple selected clusters, the workload is assigned to the cluster with sufficient inventory and the shortest wait time among the selected clusters.
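The placement rules above can be summarized as a small decision function. The following Python sketch is a simplified model, not the EGS scheduler: the Cluster fields (has_sufficient_inventory, wait_minutes) and pick_cluster are hypothetical, and the two cases this topic does not describe (bursting enabled with clusters selected, and no cluster qualifying) are surfaced explicitly rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Cluster:
    name: str
    has_sufficient_inventory: bool  # hypothetical: enough GPUs for the request
    wait_minutes: float             # hypothetical: estimated wait time


def pick_cluster(
    clusters: Sequence[Cluster],
    selected: Sequence[str],
    burst_enabled: bool,
    gpu_node_type: Optional[str] = None,
) -> Optional[Cluster]:
    """Sketch of the rules listed under Bursting Scenarios."""
    by_name = {c.name: c for c in clusters}
    if burst_enabled:
        if selected:
            # Bursting with clusters selected is not described in this topic.
            raise NotImplementedError("burst with selected clusters is not covered")
        pool = list(clusters)
    else:
        if not selected:
            raise ValueError("select at least one cluster when bursting is disabled")
        if len(selected) == 1:
            if not gpu_node_type:
                raise ValueError("specify the GPU node type for a single cluster")
            return by_name[selected[0]]
        pool = [by_name[name] for name in selected]
    eligible = [c for c in pool if c.has_sufficient_inventory]
    if not eligible:
        return None  # what happens next is not described in this topic
    # Shortest wait wins; min() keeps the first cluster on a tie.
    return min(eligible, key=lambda c: c.wait_minutes)
```

For example, with clusters a (sufficient inventory, 30-minute wait), b (sufficient, 10 minutes), and c (insufficient), bursting with no selection picks b, while disabling bursting and selecting a and c picks a.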